Threading Model

We use an M:N threading model for all languages we support.

Because Java has used the thread-per-request model for so long, most libraries that deal with I/O will block.

If we take Java as an example, it used the thread-per-request model for a long time. Each thread is mapped 1:1 to a kernel thread and costs about 1 MB (roughly 200-300 KB actually used)*.

1 thread per channel. Multiple connections per channel. Each thread uses roughly 100 MB* (Review) of RAM.

We can directly control the variable RAM above the fixed static storage.

I/O bound or CPU bound

I/O-bound work gets its information from the network.

In memory-bound systems, the achievable number of requests per second (RPS) can be estimated with the following calculation:

RPS = (Total RAM / Worker memory) * (1 / Task time)
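
As a worked example of the formula above, the sketch below plugs in illustrative numbers that are assumptions, not measurements: 16 GiB of RAM, 1 MiB per worker, and 50 ms per task. That gives 16,384 workers, each finishing 20 requests per second, or roughly 327,680 RPS. The class and method names are hypothetical.

```java
/** Back-of-the-envelope throughput estimate for a memory-bound service. */
final class RpsEstimate {

    /**
     * @param totalRamBytes     RAM available for workers
     * @param workerMemoryBytes RAM consumed by one worker
     * @param taskTimeSeconds   average time one worker spends on one request
     */
    static double requestsPerSecond(long totalRamBytes, long workerMemoryBytes, double taskTimeSeconds) {
        long maxWorkers = totalRamBytes / workerMemoryBytes; // how many workers fit in RAM
        return maxWorkers * (1.0 / taskTimeSeconds);         // each worker completes 1/taskTime requests per second
    }

    public static void main(String[] args) {
        long totalRam = 16L * 1024 * 1024 * 1024; // 16 GiB (illustrative assumption)
        long perWorker = 1L * 1024 * 1024;        // 1 MiB per worker (illustrative assumption)
        double taskTime = 0.05;                   // 50 ms per request (illustrative assumption)
        System.out.println(requestsPerSecond(totalRam, perWorker, taskTime));
    }
}
```

The estimate shows why shrinking per-worker memory matters: halving the worker footprint doubles the ceiling without touching task time.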

Many frameworks already help optimize CPU-bound computations, and since CPU-bound work lives within a single service and does not span the network, we do not focus on it.

We focus instead on I/O, more specifically network I/O.

Network and Disk IO.

When deciding on our threading model, we can either use the 1:1 threading model or the M:N threading model. Across all of our supported languages, we use the M:N threading model. This allows us to move much of the work into user space rather than kernel space.

The challenge is that the M:N model is fraught with peril and difficult to get right. We have leveraged the great work from some brilliant minds to accomplish this.

Old saying: scalability is specialization. To build something great, we can’t outsource performance or security.

Performance and scalability are orthogonal concepts.

“non-blocking” concurrency

concurrency vs parallelism

- Reduce thread contention

Kernel threads

User threads

Languages such as Java and C# introduce a “manual” non-blocking programming model with callback functions. There are frameworks that make it easier to use.
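
A minimal sketch of the callback style in Java, using the standard `CompletableFuture` class. The `fetchAsync` method is a hypothetical stand-in for a network call; it is not part of any library discussed here.

```java
import java.util.concurrent.CompletableFuture;

final class CallbackSketch {

    // Hypothetical stand-in for a network call; it completes on another thread.
    static CompletableFuture<String> fetchAsync(String key) {
        return CompletableFuture.supplyAsync(() -> "value-for-" + key);
    }

    static CompletableFuture<Integer> lengthOf(String key) {
        // The callback runs when the result arrives; the caller never blocks.
        return fetchAsync(key).thenApply(String::length);
    }

    public static void main(String[] args) {
        lengthOf("user:42")
            .thenAccept(n -> System.out.println("length = " + n))
            .join(); // join only so the demo does not exit before the callback runs
    }
}
```

The calling thread registers what should happen next and moves on, which is the "manual" part: control flow is expressed as chained callbacks rather than sequential statements.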

Languages like Go take a very different approach: threads are managed in user space rather than in kernel space.

This approach is called green threads or user threads.

The kernel isn’t the solution. The kernel is the problem.

Which means:

Don’t let the kernel do all the heavy lifting. Take packet handling, memory management, and processor scheduling out of the kernel and put it into the application, where it can be done efficiently. Let Linux handle the control plane and let the application handle the data plane.

To learn how it’s done, we turn to Robert Graham, CEO of Errata Security.

Back pressure

When the operating system manages blocking I/O, it natively handles back pressure. However, within our threading model, we must manually handle this for non-blocking I/O.

This is important for certain denial of service attacks.
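
One way to handle back pressure manually is to cap the number of in-flight requests and refuse new work when the cap is reached. The sketch below assumes a semaphore-based gate; the class `BackPressureGate` and its methods are hypothetical names used only for illustration.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

final class BackPressureGate {
    private final Semaphore slots;

    BackPressureGate(int maxInFlight) {
        this.slots = new Semaphore(maxInFlight);
    }

    /** Returns null when the gate is full so the caller can shed or delay load. */
    <T> CompletableFuture<T> trySubmit(Supplier<CompletableFuture<T>> request) {
        if (!slots.tryAcquire()) {
            return null; // push back on the caller instead of queueing without bound
        }
        CompletableFuture<T> future;
        try {
            future = request.get();
        } catch (RuntimeException e) {
            slots.release(); // the request never started, so free its slot
            throw e;
        }
        // Free the slot when the request completes, successfully or not.
        future.whenComplete((result, error) -> slots.release());
        return future;
    }

    int availableSlots() {
        return slots.availablePermits();
    }
}
```

Because rejected work never allocates resources, a flood of requests costs the service little more than a failed `tryAcquire`, which is the property that matters for denial of service resistance.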

UUID Generation

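UUID generation is mostly blocking. java.util.UUID.randomUUID() draws its bytes from a shared SecureRandom, and in JDK 8, for example, SecureRandom.nextBytes is synchronized, so concurrent callers contend on a single lock: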

    synchronized public void nextBytes(byte[] bytes) {
        secureRandomSpi.engineNextBytes(bytes);
    }
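
One common workaround, shown here only as a sketch, is to build version 4 UUIDs from `ThreadLocalRandom`, which needs no shared lock. This swaps in a different technique from `randomUUID()`: the values are not cryptographically secure, so it is only an option where UUIDs are identifiers and not secrets. The class name `FastUuid` is hypothetical.

```java
import java.util.UUID;
import java.util.concurrent.ThreadLocalRandom;

final class FastUuid {

    /** Random (version 4) UUID from a non-cryptographic, per-thread generator. */
    static UUID next() {
        ThreadLocalRandom random = ThreadLocalRandom.current();
        long msb = random.nextLong();
        long lsb = random.nextLong();
        msb = (msb & 0xFFFFFFFFFFFF0FFFL) | 0x0000000000004000L; // set the version field to 4
        lsb = (lsb & 0x3FFFFFFFFFFFFFFFL) | 0x8000000000000000L; // set the IETF variant bits
        return new UUID(msb, lsb);
    }

    public static void main(String[] args) {
        System.out.println(next());
    }
}
```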

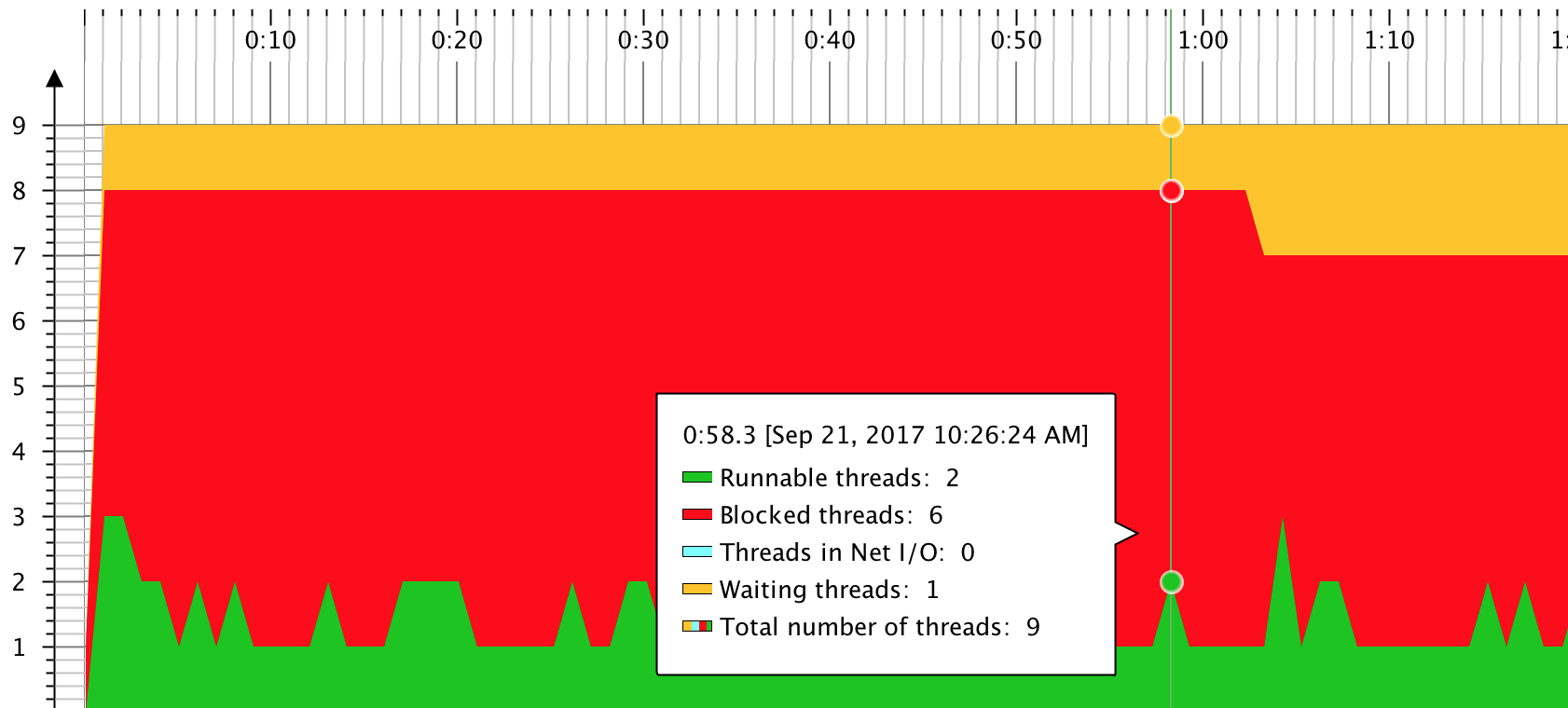

![[Pasted image 20220517061855.png]]

A spec-compliant library processes requests in parallel and asynchronously without waiting for the results. The results are processed in a different callback thread as they arrive. An async request uses a dedicated connection or binding to a server over a channel.

An event loop is a design pattern https://en.wikipedia.org/wiki/Event_loop

EventPlane Loop

Number of event loops: By default we calculate the number of CPU cores available to the application and create an event loop for each core.
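
A minimal sketch of sizing the loop group from the core count, assuming each event loop is modeled as a single-threaded executor. `EventLoopGroup` and its methods are hypothetical names, not the actual implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

final class EventLoopGroup {
    private final List<ExecutorService> loops = new ArrayList<>();
    private final AtomicInteger cursor = new AtomicInteger();

    /** Default: one event loop per CPU core visible to the JVM. */
    EventLoopGroup() {
        this(Runtime.getRuntime().availableProcessors());
    }

    EventLoopGroup(int loopCount) {
        for (int i = 0; i < loopCount; i++) {
            loops.add(Executors.newSingleThreadExecutor()); // one thread per loop
        }
    }

    int size() {
        return loops.size();
    }

    /** Round-robin selection so requests spread evenly across loops. */
    ExecutorService next() {
        int index = Math.floorMod(cursor.getAndIncrement(), loops.size());
        return loops.get(index);
    }

    void shutdown() {
        loops.forEach(ExecutorService::shutdown);
    }
}
```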

Concurrency level: Within each event loop we capture the concurrency level. If this number is too large, then it would result in request timeouts and other errors.

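Delay Queue Buffer: Requests that arrive while every slot in the event loop is busy are held in a delay queue until a slot becomes available.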

Throttling: Based on the concurrency level of the event loop, we wait for an available slot.

Rewrite this:

Execution Model:

EventPlane loops: An event loop represents the loop of “submit a request” and “asynchronously process the result” for concurrent processing of events or requests. Multiple event loops are used to leverage multiple CPU cores.

Listener: The listener encapsulates the processing of results.

Listener types: Depending on the expected number of records in the result and whether they arrive at once or individually, different listener types are to be used such as a single record listener, a record array listener, or a record sequence listener.

Completion handlers: A single record or record array is processed with the success or failure handler. In a record sequence listener, each record is processed with a “record” handler, whereas the success handler is called to mark the end of the sequence.
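
The three listener shapes described above could be expressed as the following interfaces. These are hypothetical signatures written for illustration, not the API of any particular client library.

```java
import java.util.ArrayList;
import java.util.List;

/** One record arrives at once. */
interface SingleRecordListener<R> {
    void onSuccess(R record);
    void onFailure(Exception error);
}

/** Several records arrive together. */
interface RecordArrayListener<R> {
    void onSuccess(List<R> records);
    void onFailure(Exception error);
}

/** Records arrive one by one; onSuccess marks the end of the sequence. */
interface RecordSequenceListener<R> {
    void onRecord(R record);
    void onSuccess();
    void onFailure(Exception error);
}

/** Example sequence listener that simply collects what it receives. */
final class CollectingSequenceListener<R> implements RecordSequenceListener<R> {
    final List<R> records = new ArrayList<>();
    boolean finished;
    Exception failure;

    @Override public void onRecord(R record) { records.add(record); }
    @Override public void onSuccess() { finished = true; }
    @Override public void onFailure(Exception error) { failure = error; }
}
```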

Application Call Sequence

The application is responsible for spreading requests evenly across event loops as well as throttling the rate of requests if the request rate can exceed the client or server capacity. The call sequence involves these steps (see the code in Async Framework section below):

- Initialize event loops.
- Implement the listener with success and failure handlers.
- Submit requests across event loops, throttling to stay below the maximum outstanding requests limit.
- Wait for all outstanding requests to finish.
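
The four steps can be sketched end to end as follows. This is a simplified model under stated assumptions: event loops are single-threaded executors, `sendRequest` is a hypothetical no-op placeholder for the real non-blocking call, and the handlers fire on the loop thread right after it returns. None of these names come from a real client API.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

final class CallSequenceSketch {

    /** Hypothetical listener with success and failure handlers. */
    interface Listener {
        void onSuccess(int requestId);
        void onFailure(int requestId, Exception error);
    }

    /** Hypothetical placeholder for the real non-blocking request. */
    static void sendRequest(int requestId) {
        // no-op in this sketch
    }

    /** Runs the whole sequence and returns the number of successful requests. */
    static int run(int requestCount, int maxOutstanding) {
        // Step 1: initialize event loops, one per available core.
        int loopCount = Runtime.getRuntime().availableProcessors();
        ExecutorService[] loops = new ExecutorService[loopCount];
        for (int i = 0; i < loopCount; i++) {
            loops[i] = Executors.newSingleThreadExecutor();
        }

        Semaphore outstanding = new Semaphore(maxOutstanding);
        CountDownLatch finished = new CountDownLatch(requestCount);
        AtomicInteger succeeded = new AtomicInteger();

        // Step 2: implement the listener; both handlers free the request's slot.
        Listener listener = new Listener() {
            @Override public void onSuccess(int requestId) {
                succeeded.incrementAndGet();
                outstanding.release();
                finished.countDown();
            }
            @Override public void onFailure(int requestId, Exception error) {
                outstanding.release();
                finished.countDown();
            }
        };

        // Step 3: submit round-robin across loops, blocking while the limit is reached.
        for (int i = 0; i < requestCount; i++) {
            final int requestId = i;
            outstanding.acquireUninterruptibly();
            loops[i % loopCount].execute(() -> {
                try {
                    sendRequest(requestId);
                } catch (RuntimeException e) {
                    listener.onFailure(requestId, e);
                    return;
                }
                listener.onSuccess(requestId);
            });
        }

        // Step 4: wait for all outstanding requests to finish.
        try {
            finished.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            for (ExecutorService loop : loops) {
                loop.shutdown();
            }
        }
        return succeeded.get();
    }
}
```

Calling `CallSequenceSketch.run(100, 8)` submits 100 requests while never allowing more than 8 to be outstanding at once.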

Understanding EventPlane Loops

Let’s look at the key concepts relating to event loops. As described above, an event loop represents concurrent submit-callback processing of requests. See the code in Async Framework section below.

Number of event loops: In order to maximize parallelism of the client hardware, as many event loops are created as the number of cores dedicated to the Aerospike application. An event loop is aligned with a CPU core, not with a server node or a request type.

Concurrency level: The maximum concurrency level in each event loop depends on the effective server throughput seen by the client, and in aggregate may not exceed it. A larger value would result in request timeouts and other failures.

Connection pools and event loops: Connection pools are allocated on a per-node basis, and are independent of event loops. When an async request needs to connect to a node, it uses a connection from the node’s connection pool only for the duration of the request and then releases it.

Connection pool size: Concurrency across all loops must be supported by the number of connections in the connection pool. The connection pool per node should be set equal to or greater than the total number of outstanding requests across all event loops (because all requests may go to the same node in the extreme case).
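
The sizing rule above reduces to a single multiplication, assuming every event loop uses the same concurrency limit. The helper below is a hypothetical illustration: with 8 event loops and 40 outstanding requests per loop, each node’s pool needs at least 320 connections.

```java
final class PoolSizing {

    /** Minimum connections per node so that all loops can target one node at once. */
    static int minConnectionsPerNode(int eventLoops, int maxConcurrencyPerLoop) {
        // multiplyExact throws on overflow instead of silently wrapping
        return Math.multiplyExact(eventLoops, maxConcurrencyPerLoop);
    }

    public static void main(String[] args) {
        System.out.println(minConnectionsPerNode(8, 40));
    }
}
```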

Delay queue buffer: To buffer a temporary mismatch in processing and submission rates, there is a delay queue buffer in front of an event loop where requests are held until an async request slot becomes available in the event loop. The queued request is automatically assigned to a slot and processed without involvement of the application.

Throttling: The delay queue cannot buffer a long-running mismatch in submission and processing speeds, however, and if the delay queue fills up, a request will not be accepted and the client will return a “delay queue full” error. The application should throttle by keeping track of outstanding requests and issuing a new request only when an outstanding one finishes. If the delay queue size is set to zero, throttling must also be handled in the application code.
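
The interaction between slots, the delay queue, and rejection can be modeled in a few lines. This is a simplified sketch under our own assumptions, not the client’s actual implementation; `DelayQueueLoop` and its methods are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.Queue;

final class DelayQueueLoop {
    private final int maxConcurrency;
    private final int maxDelayQueueSize;
    private final Queue<Runnable> delayQueue = new ArrayDeque<>();
    private int inFlight;

    DelayQueueLoop(int maxConcurrency, int maxDelayQueueSize) {
        this.maxConcurrency = maxConcurrency;
        this.maxDelayQueueSize = maxDelayQueueSize;
    }

    /** Starts the request if a slot is free, queues it otherwise, or rejects it when the queue is full. */
    synchronized void submit(Runnable startRequest) {
        if (inFlight < maxConcurrency) {
            inFlight++;
            startRequest.run();
        } else if (delayQueue.size() < maxDelayQueueSize) {
            delayQueue.add(startRequest);
        } else {
            throw new IllegalStateException("delay queue full");
        }
    }

    /** Called when a request finishes; hands the freed slot to the oldest queued request. */
    synchronized void onRequestFinished() {
        Runnable next = delayQueue.poll();
        if (next != null) {
            next.run(); // the slot passes directly to the queued request
        } else {
            inFlight--;
        }
    }

    synchronized int inFlight() { return inFlight; }

    synchronized int queued() { return delayQueue.size(); }
}
```

With a delay queue size of zero, every submission beyond the concurrency limit is rejected immediately, which is why the application then has to do all of the throttling itself.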